Introduction

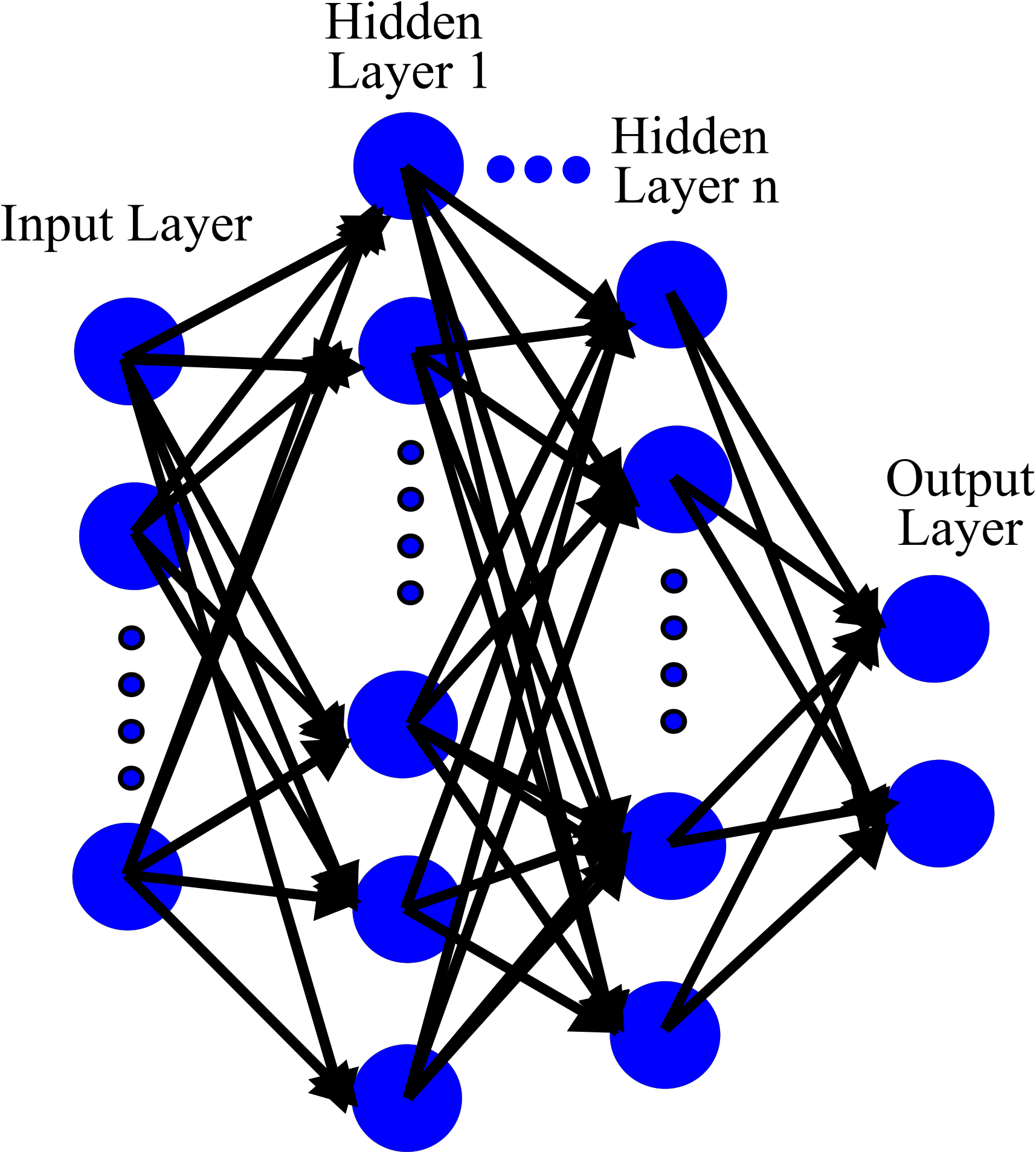

Previously, we discussed artificial neural networks, but they were very shallow, with only a few hidden layers. A very complex problem may require training a much deeper neural network, for example one with 10 layers or many more, each layer with hundreds or thousands of neurons.

Training such a deep network is not an easy task, because we may face several challenges:

- Vanishing or exploding gradients.

- It may require many training samples.

- Training can be very slow and computationally expensive.

- A deep model has a higher risk of overfitting.

Vanishing/Exploding Gradients Problem

The backpropagation algorithm works by going from the output layer to the input layer, propagating the error gradient along the way. Once the algorithm has computed the gradient of the cost function with regard to each parameter in the network, it uses these gradients to update each parameter with a Gradient Descent step (see previous lectures):

$\large w^{u}=w-\alpha \frac{\partial MSE}{\partial w}$

where $\alpha$ is the learning rate, MSE (mean squared error) is the cost function and $w^{u}$ is the updated value of the weight $w$.

Unfortunately, gradients often get smaller and smaller as the algorithm progresses down to the lower layers. As a result, the Gradient Descent update leaves the connection weights of the lower layers virtually unchanged, and training never converges to a good solution. This is called the vanishing gradients problem. In some cases, the opposite can happen: the gradients grow bigger and bigger until many layers get extremely large weight updates and the algorithm diverges. This is the exploding gradients problem.
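To see why gradients shrink, recall that backpropagation multiplies one local derivative per layer (chain rule). The sketch below is a deliberately simplified illustration: it ignores the weights and assumes every pre-activation sits at z = 0, the best case for the sigmoid, whose derivative σ(z)(1 − σ(z)) peaks at 0.25 there.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)

# Best case for the sigmoid: the pre-activation is exactly 0, where the derivative peaks
peak = sigmoid_derivative(0.0)

# Product of one such factor per layer (weights deliberately ignored)
factors = {n_layers: peak ** n_layers for n_layers in (1, 5, 10, 20)}
for n_layers, factor in factors.items():
    print(f"{n_layers:2d} layers -> gradient scaled by at most {factor:.3e}")
```

Even in this best case, 10 sigmoid layers scale the gradient by less than 10⁻⁶.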

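He Initialization

When the weights of a neural network are initialized randomly without taking the size of each layer into account, vanishing and exploding gradients become much more likely. One way to mitigate this is to keep the variance of the outputs of each layer equal to the variance of its inputs. He initialization achieves this by drawing the random weights with a variance that depends on the number of inputs of the layer, $\mathrm{fan}_{\mathrm{in}}$: $\sigma^2 = 2/\mathrm{fan}_{\mathrm{in}}$. Glorot initialization instead uses the average of the numbers of inputs and outputs, $\sigma^2 = 1/\mathrm{fan}_{\mathrm{avg}}$ with $\mathrm{fan}_{\mathrm{avg}} = (\mathrm{fan}_{\mathrm{in}} + \mathrm{fan}_{\mathrm{out}})/2$. Glorot initialization is usually used with the sigmoid function, which we do not consider here (its uniform version is also Keras's default for Dense layers).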

He initialization can be applied in Keras simply by setting kernel_initializer="he_uniform" for a uniform distribution or kernel_initializer="he_normal" for a normal distribution, as below:

from tensorflow import keras

keras.layers.Dense(100, activation='relu', kernel_initializer="he_uniform")
keras.layers.Dense(100, activation='relu', kernel_initializer="he_normal")
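The effect of He initialization can be checked without Keras. The NumPy sketch below rests on some simplifying assumptions: 20 fully connected ReLU layers of equal width (256), no biases, and standard-normal inputs. It compares He initialization (a normal distribution with standard deviation $\sqrt{2/\mathrm{fan}_{\mathrm{in}}}$) with an arbitrary fixed standard deviation of 0.01. Keras's "he_normal" actually samples from a truncated normal; a plain normal is used here for simplicity.

```python
import numpy as np

rng = np.random.default_rng(0)
fan_in, n_layers = 256, 20
x = rng.standard_normal((1000, fan_in))  # a batch of 1000 standardized inputs

def output_std(weight_std):
    """Std of the activations after n_layers ReLU layers with N(0, weight_std^2) weights."""
    a = x
    for _ in range(n_layers):
        W = rng.normal(0.0, weight_std, size=(fan_in, fan_in))
        a = np.maximum(0.0, a @ W)  # dense layer (no bias) followed by ReLU
    return a.std()

he_std = np.sqrt(2.0 / fan_in)   # He initialization
he_out = output_std(he_std)
small_out = output_std(0.01)     # naive small random weights

print("He init      :", he_out)
print("std=0.01 init:", small_out)
```

With He initialization the activations keep roughly the same scale through all 20 layers, while with the small fixed standard deviation they shrink by many orders of magnitude, and so do the gradients flowing back through them.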

Activation Functions

Another cause of the vanishing/exploding gradients problems is a poor choice of activation function. Because biological neurons seem to behave roughly like a sigmoid, it was long assumed that the sigmoid was the best activation function. However, it turned out that the sigmoid causes vanishing gradients in deep neural networks (DNNs): it saturates for large negative and large positive inputs, where its gradient is close to zero. Other activation functions work much better than the sigmoid for DNNs. ReLU has been widely used because it is very fast to compute and its gradient is not zero (it equals 1) for positive inputs.

import matplotlib.pyplot as plt
import numpy as np

font = {'size': 12}
plt.rc('font', **font)
fig = plt.figure(figsize=(13.0, 4.5), dpi=90, facecolor='w', edgecolor='k')
z1 = np.linspace(-3.5, 3.5, 100)

def sgd(y):
    """Sigmoid (logistic) function."""
    return 1 / (1 + np.exp(-y))

def relu(y):
    """Rectified linear unit."""
    return np.maximum(0, y)

def drivtve(f, y, h=1e-6):
    """Central finite-difference approximation of the derivative f'(y)."""
    return (f(y + h) - f(y - h)) / (2 * h)

# Left panel: the activation functions
plt.subplot(1, 2, 1)
plt.plot(z1, sgd(z1), "b--", linewidth=2, label="Sigmoid")
plt.plot(z1, np.tanh(z1), "r-", linewidth=2, label="Tanh")
plt.plot(z1, relu(z1), "g-.", linewidth=4, label="ReLU")
plt.grid(True)
plt.legend(loc=4, fontsize=14)
plt.title("Activation functions", fontsize=14)
plt.ylim(-1, 1)

# Right panel: their derivatives
plt.subplot(1, 2, 2)
plt.plot(z1, drivtve(sgd, z1), "b--", linewidth=2, label="Sigmoid")
plt.plot(z1, drivtve(np.tanh, z1), "r-", linewidth=2, label="Tanh")
plt.plot(z1, drivtve(relu, z1), "g-.", linewidth=4, label="ReLU")
plt.grid(True)
plt.title("Derivative of each Activation Function", fontsize=14)
plt.show()

Unfortunately, ReLU suffers from a problem known as dying ReLUs: during training, some neurons effectively die and output only 0. Because the gradient of ReLU is 0 whenever its input is negative, once a neuron's input is negative for all training instances, Gradient Descent no longer changes its weights, no matter how many iterations are run. Leaky ReLU was developed to solve this problem: it has a small slope α for negative inputs, so its gradient is never zero. See the figure below:

Leaky ReLU

font = {'size': 12}
plt.rc('font', **font)
fig, ax = plt.subplots(figsize=(6, 4), dpi=90, facecolor='w', edgecolor='k')

def relu_leaky(y, alpha=0.01):
    """Leaky ReLU: y for y >= 0, alpha * y for y < 0 (assumes 0 < alpha < 1)."""
    return np.maximum(alpha * y, y)

y = np.linspace(-3, 3, 100)
plt.plot(y, relu_leaky(y, 0.1), "g-.", linewidth=3.5)
plt.plot([-3, 3], [0, 0], 'k-')      # x-axis
plt.plot([0, 0], [-0.5, 4.2], 'k-')  # y-axis
plt.grid(True)
p = dict(facecolor='black')          # arrow style for the annotation
plt.annotate(r'$\alpha=Leak=0.1$', xytext=(-1.5, 0.3), xy=(-2, -0.2), arrowprops=p, fontsize=14, ha="center")
plt.title('Leaky ReLU activation = ' + r'$\max(\alpha x, x)$', fontsize=14)
plt.axis([-3, 3, -0.5, 3.2])
plt.show()
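The difference between the two activations shows up in their gradients. In the NumPy sketch below, the three pre-activation values are hypothetical and all negative, as for a neuron that has died. ReLU passes back a zero gradient for every one of them, so Gradient Descent cannot update that neuron's weights, whereas Leaky ReLU (here with α = 0.2) still passes back α.

```python
import numpy as np

def relu_grad(z):
    # Derivative of max(0, z): 1 for z > 0, 0 otherwise (0 chosen at z = 0)
    return np.where(z > 0, 1.0, 0.0)

def leaky_relu_grad(z, alpha=0.2):
    # Derivative of max(alpha * z, z) for 0 < alpha < 1: 1 for z > 0, alpha otherwise
    return np.where(z > 0, 1.0, alpha)

z = np.array([-3.0, -1.5, -0.2])  # hypothetical negative pre-activations

print("ReLU gradient:      ", relu_grad(z))
print("Leaky ReLU gradient:", leaky_relu_grad(z))
```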

Setting α = 0.2 (a large leak) often seems to give better performance than α = 0.01 (a small leak). Since α is a hyperparameter, it can be tuned, for example with GridSearchCV. The following code shows how Leaky ReLU can be used in Keras:

from tensorflow import keras

leaky_relu = keras.layers.LeakyReLU(alpha=0.2)  # Leaky ReLU with alpha=0.2
keras.layers.Dense(100, activation='relu', kernel_initializer="he_uniform")  # ReLU activation
keras.layers.Dense(100, activation=leaky_relu, kernel_initializer="he_normal")  # Leaky ReLU activation

Instead of a linear function for the leak, an exponential function can be used for negative inputs. The result is the exponential linear unit (ELU), which often gives better performance than all the ReLU variants (see below).

Exponential Linear Unit (ELU)

font = {'size': 12}
plt.rc('font', **font)
fig, ax = plt.subplots(figsize=(6, 4), dpi=90, facecolor='w', edgecolor='k')

def ELU(y, alpha=1.0):
    """ELU: y for y >= 0, alpha * (exp(y) - 1) for y < 0."""
    return np.where(y < 0, alpha * (np.exp(y) - 1), y)

y = np.linspace(-3, 3, 100)
p = dict(facecolor='black')  # arrow style for the annotation
plt.plot(y, ELU(y), "g-.", linewidth=3.2)
plt.plot([-3, 3], [0, 0], 'k-')        # x-axis
plt.plot([-3, 3], [-1.0, -1.0], 'k--')  # asymptote at -alpha
plt.plot([0, 0], [-2.2, 3.2], 'k-')     # y-axis
plt.grid(True)
plt.annotate(r'$ELU(\alpha=1)$', xytext=(-1.2, 0.3), xy=(-2.3, -0.9), arrowprops=p, fontsize=14, ha="center")
plt.title('ELU activation = ' + r'$\alpha(e^{x}-1)$ if $x<0$, else $x$', fontsize=14)
plt.axis([-3, 3, -2.2, 3.2])
plt.show()

ELU can be used in Keras simply by setting activation='elu':

from tensorflow import keras

keras.layers.Dense(100, activation='elu', kernel_initializer="he_uniform")  # ELU activation
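For negative inputs, the ELU's derivative is $\alpha e^{x}$, which can also be written as ELU(x) + α. Unlike ReLU's, it is never exactly zero, and the output saturates smoothly at −α for very negative inputs. The sketch below uses α = 1 (the default of Keras's 'elu' activation and the value in the plot above) and checks the analytical derivative against a central finite difference; the sample points are arbitrary.

```python
import numpy as np

def elu(x, alpha=1.0):
    # ELU: x for x >= 0, alpha * (exp(x) - 1) for x < 0
    return np.where(x < 0, alpha * (np.exp(x) - 1), x)

def elu_grad(x, alpha=1.0):
    # Analytical derivative: 1 for x >= 0, alpha * exp(x) = elu(x) + alpha for x < 0
    return np.where(x < 0, elu(x, alpha) + alpha, 1.0)

x = np.array([-4.0, -2.0, -0.5, 0.5, 2.0])
h = 1e-6
numeric_grad = (elu(x + h) - elu(x - h)) / (2 * h)  # central finite difference

print("ELU(x):          ", elu(x).round(4))
print("gradient (exact):", elu_grad(x).round(4))
print("matches finite difference:", np.allclose(numeric_grad, elu_grad(x), atol=1e-5))
```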

So which activation function should we use? In general, for DNNs the ranking is roughly ELU > leaky ReLU (and its variants) > ReLU > tanh > logistic (sigmoid). If you have time, GridSearchCV can help select the best one. However, ReLU is still commonly used in very deep networks because it is so fast. See Aurélien Géron (2019) for more information.

Batch Normalization

Using He initialization along with ELU (or any variant of ReLU) can significantly reduce the vanishing/exploding gradients problems at the beginning of training; however, it does not guarantee that they won't come back during training.

Batch Normalization is another technique that helps reduce the vanishing/exploding gradients problems. It consists of adding an operation to the model just before or after the activation function of each hidden layer. This operation zero-centers and normalizes each input, using the mean and standard deviation computed over the current mini-batch, then scales and shifts the result using two new parameter vectors per layer: one for scaling and the other for shifting (Aurélien Géron, 2019). It can be implemented in Keras as below:

from tensorflow import keras

leaky_relu = keras.layers.LeakyReLU(alpha=0.2)
model = keras.models.Sequential([
    keras.layers.BatchNormalization(),
    keras.layers.Dense(100, activation='relu', kernel_initializer="he_uniform"),  # ReLU activation
    keras.layers.BatchNormalization(),
    keras.layers.Dense(100, activation=leaky_relu, kernel_initializer="he_normal"),  # Leaky ReLU activation
    keras.layers.BatchNormalization(),
])
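To make the zero-centering, normalizing, scaling and shifting steps concrete, here is a NumPy sketch of what a Batch Normalization layer computes for one mini-batch during training. It is a simplification: at inference time, Keras uses moving averages of the mean and variance accumulated during training instead of the batch statistics, which is omitted here. The ε of 1e-3 and the initial values γ = 1 and β = 0 match Keras's defaults; the input data is random and purely illustrative.

```python
import numpy as np

def batch_norm_train(X, gamma, beta, eps=1e-3):
    # X: (batch_size, n_features) inputs to the BN layer for one mini-batch
    mu = X.mean(axis=0)                    # per-feature mean over the batch
    var = X.var(axis=0)                    # per-feature variance over the batch
    X_hat = (X - mu) / np.sqrt(var + eps)  # zero-center and normalize
    return gamma * X_hat + beta            # scale and shift (learned parameters)

rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=10.0, size=(32, 4))  # badly scaled, off-center inputs
gamma = np.ones(4)   # scale vector, initialized to 1
beta = np.zeros(4)   # shift vector, initialized to 0
out = batch_norm_train(X, gamma, beta)

print("means:", out.mean(axis=0).round(6))
print("stds: ", out.std(axis=0).round(3))
```

During training, Gradient Descent then learns γ and β like any other weights, so the layer can undo the normalization if that helps.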

Optimizers

As discussed in the previous lecture, Gradient Descent can be used to optimize the randomly initialized weights. However, training a very large deep neural network this way can be painfully slow. Stochastic Gradient Descent (SGD), which computes the gradients on a single random instance (or a small mini-batch) at each step, can speed up DNN training. However, SGD is much more random than regular Gradient Descent, so it may bounce around and not settle at the global minimum.

We have seen three ways to speed up training (and reach a better solution): using a good initialization, applying a suitable activation function, and applying Batch Normalization. Another significant speed boost can come from using a faster optimizer than the regular Gradient Descent optimizer, such as:

- Nesterov

- Adagrad

- Adadelta

- RMSprop

- Adam

- Nadam

- Adamax

Of these, Adam and RMSprop are generally the most reliable optimizers and should be tried first for DNNs (see Aurélien Géron, 2019 for more details about each optimizer). GridSearchCV can also be used to select the best optimizer.
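Adam combines momentum (a decaying average of past gradients) with RMSprop-style scaling (a decaying average of past squared gradients). The sketch below applies the textbook form of the Adam update rule (not Keras's source code) to a toy one-dimensional problem, minimizing $f(w) = (w - 3)^2$. The values β₁ = 0.9, β₂ = 0.999 and ε = 1e-7 match Keras's Adam defaults; the learning rate of 0.1 (Keras defaults to 0.001) and the toy function are assumptions chosen so that it converges quickly.

```python
import numpy as np

def adam_minimize(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-7, n_steps=1000):
    w = float(w0)
    m = 0.0  # decaying average of gradients (first moment, momentum)
    v = 0.0  # decaying average of squared gradients (second moment, RMSprop-style)
    for t in range(1, n_steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction: m and v start at 0
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return w

# Minimize f(w) = (w - 3)^2, whose gradient is 2 (w - 3)
w_star = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=-5.0)
print(w_star)
```

In Keras, the same optimizer is selected with optimizer='adam' in model.compile, or with keras.optimizers.Adam(learning_rate=...) to set its hyperparameters explicitly.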

Avoiding Overfitting by Regularization

We have already used early stopping to avoid overfitting in neural networks. Other regularization techniques that help avoid overfitting are described below.

Ridge (L2) and Lasso (L1) Regularization

Ridge (L2) and Lasso (L1) regularization were discussed in Lecture 6 (Model Training). These techniques can also be applied to neural networks. In general, L2 regularization tends to give better overall performance than L1. In Keras, a regularizer is attached to a layer through its kernel_regularizer argument:

from tensorflow import keras

keras.layers.Dense(100, activation="elu", kernel_initializer="he_normal", kernel_regularizer=keras.regularizers.l2(0.01))  # 0.01 is the regularization factor
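What an L2 regularizer adds is easy to write down: keras.regularizers.l2(λ) adds λ·Σw² (summed over all the weights of the layer's kernel) to the training loss, so the gradient of each weight gets an extra 2λw term that pulls it toward zero. The NumPy sketch below computes both for a small hypothetical weight matrix; the data-loss value is made up for illustration. Summed over several layers with λ = 0.01, this penalty can dominate the reported training loss early on, which is likely why the L2-regularized model trained later in this lecture starts with a training loss above 7 while the unregularized model starts around 0.63.

```python
import numpy as np

def l2_penalty(weights, l2=0.01):
    # Term added to the loss for one weight matrix: l2 * sum of squared weights
    return l2 * np.sum(np.square(weights))

def l2_penalty_grad(weights, l2=0.01):
    # Its gradient: pulls every weight toward 0 in proportion to its size
    return 2 * l2 * weights

W = np.array([[0.5, -1.0], [2.0, 0.0]])  # hypothetical kernel
data_loss = 0.3                          # hypothetical data loss (e.g. cross-entropy)
total_loss = data_loss + l2_penalty(W)

print("penalty:", l2_penalty(W))
print("total loss:", total_loss)
print("extra gradient:\n", l2_penalty_grad(W))
```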

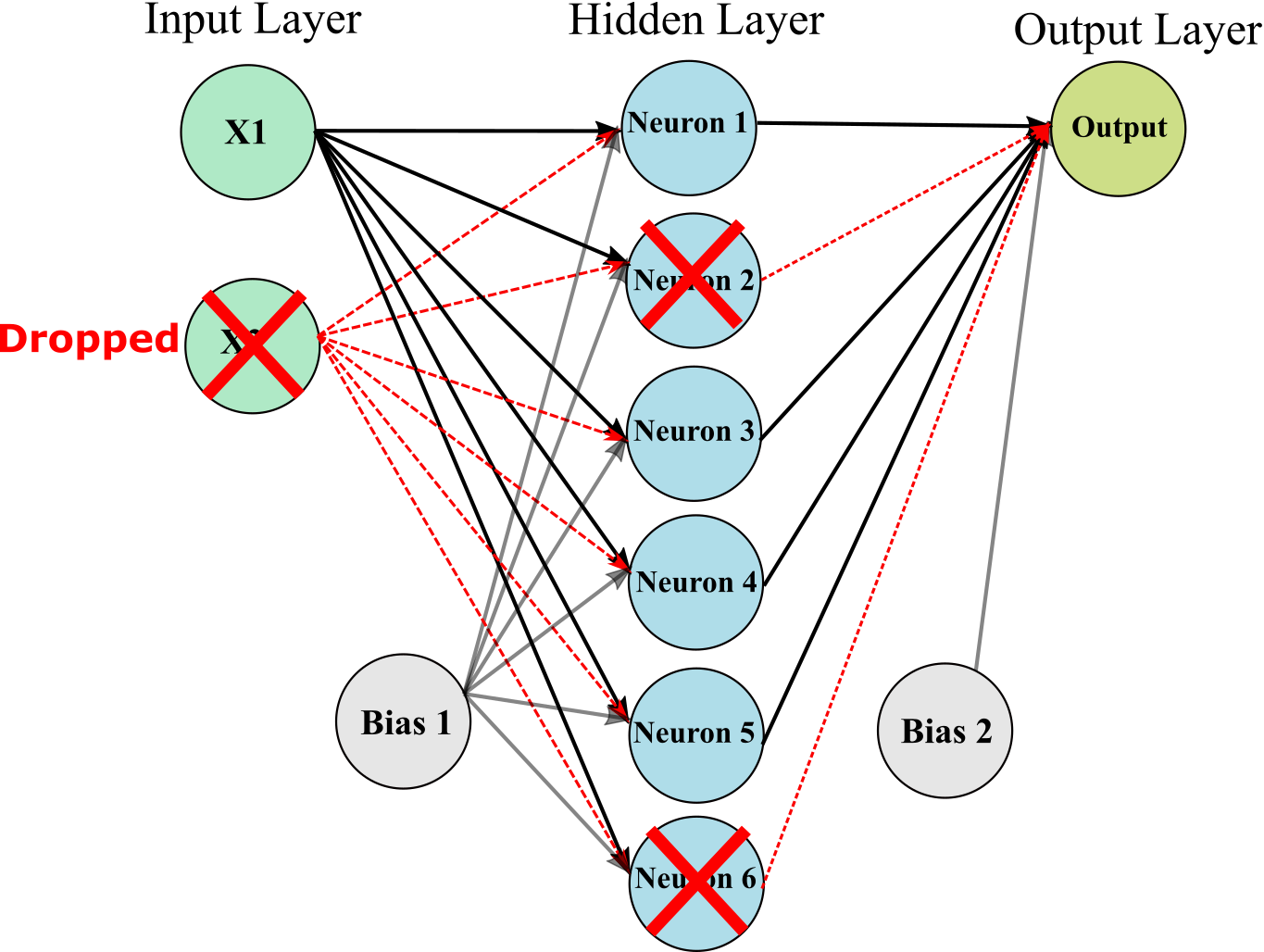

Dropout

Dropout is one of the most popular regularization techniques for deep neural networks. It has proven highly successful: even state-of-the-art neural networks have gained a 1–2% accuracy boost simply by adding dropout.

It is a very simple algorithm: at every training step, every neuron (including the input neurons, but always excluding the output neurons) has a probability p of being temporarily “dropped out”, meaning it is entirely ignored during this training step but may be active during the next step. The hyperparameter p is called the dropout rate.

To implement dropout in Keras, use the keras.layers.Dropout layer. During training, it randomly drops some of its inputs (setting them to 0) and scales up the remaining ones by 1/(1 − p); at inference time it does nothing. The following code applies dropout with a rate of 30%:

from tensorflow import keras

keras.layers.Dense(100, activation="elu", kernel_initializer="he_normal", kernel_regularizer=keras.regularizers.l2(0.01))
keras.layers.Dropout(0.3)  # drops 30% of the previous layer's outputs during training
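The training-time behavior of a dropout layer fits in a few lines. The NumPy sketch below applies a random keep/drop mask to a hypothetical matrix of activations (all equal to 1, so the effect is easy to read) with the 30% rate used above; the mask comes from NumPy rather than Keras, so the exact units dropped differ from what Keras would drop. Scaling the survivors by 1/(1 − rate) keeps the expected activation unchanged, which is why nothing needs to change at inference time.

```python
import numpy as np

def dropout_train(a, rate, rng):
    # Keep each unit with probability 1 - rate and zero out the rest
    keep_mask = rng.random(a.shape) >= rate
    # Scale the survivors so that the expected activation is unchanged
    return a * keep_mask / (1.0 - rate)

rng = np.random.default_rng(42)
a = np.ones((1000, 100))  # hypothetical layer outputs, all equal to 1
dropped = dropout_train(a, rate=0.3, rng=rng)

print("fraction dropped:", (dropped == 0).mean())  # close to 0.3
print("mean activation: ", dropped.mean())         # close to 1.0
```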

Energy Efficiency Data Set

Let's apply an ANN to the Energy Efficiency data set for binary classification. First, prepare the training data:

import pandas as pd
import numpy as np
from sklearn.model_selection import StratifiedShuffleSplit
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split

df = pd.read_csv('Building_Heating_Load.csv', na_values=['NA', '?', ' '])

# Shuffle the rows reproducibly
np.random.seed(32)
df = df.reindex(np.random.permutation(df.index))
df.reset_index(inplace=True, drop=True)

# Keep only the binary target and encode it as 0/1
df_binary = df.copy()
df_binary.drop(['Heating Load', 'Multi-Classes'], axis=1, inplace=True)
df_binary['Binary Classes'] = df_binary['Binary Classes'].replace('Low Level', 0)
df_binary['Binary Classes'] = df_binary['Binary Classes'].replace('High Level', 1)

# Training and test sets (stratified 80/20 split that preserves the class ratio)
spt = StratifiedShuffleSplit(n_splits=1, test_size=0.2, random_state=42)
for train_idx, test_idx in spt.split(df_binary, df_binary['Binary Classes']):
    train_set = df_binary.loc[train_idx]
    test_set = df_binary.loc[test_idx]

X_train = train_set.drop("Binary Classes", axis=1)
y_train = train_set["Binary Classes"].values

# Standardize the features (the scaler is fit on the training set only)
scaler = StandardScaler()
X_train_Std = scaler.fit_transform(X_train)

# Smaller training set
# Divide the training data into a smaller training set and a validation set for early stopping.
Training_c = np.concatenate((X_train_Std, np.array(y_train).reshape(-1, 1)), axis=1)
Smaller_Training, Validation = train_test_split(Training_c, test_size=0.2, random_state=100)

Smaller_Training_Target = Smaller_Training[:, -1]
Smaller_Training = Smaller_Training[:, :-1]

Validation_Target = Validation[:, -1]
Validation = Validation[:, :-1]

import tensorflow as tf
from tensorflow import keras
import matplotlib.pyplot as plt

def plot(history):
    """Plot the training/validation loss and accuracy stored in a Keras History object."""
    font = {'size': 10}
    plt.rc('font', **font)
    fig = plt.figure(figsize=(12, 4), dpi=110, facecolor='w', edgecolor='k')
    history_dict = history.history

    # Left panel: loss
    ax1 = plt.subplot(1, 2, 1)
    loss_values = history_dict['loss']
    val_loss_values = history_dict['val_loss']
    epochs = range(1, len(loss_values) + 1)
    plt.plot(epochs, loss_values, 'bo', label='Training loss')
    plt.plot(epochs, val_loss_values, 'r', label='Validation loss', linewidth=2)
    plt.title('Training and Validation Loss', fontsize=14)
    plt.xlabel('Epochs (Early Stopping)', fontsize=12)
    plt.ylabel('Loss', fontsize=11)
    plt.legend(fontsize='12')
    # plt.ylim((0, 0.8))

    # Right panel: accuracy
    ax2 = plt.subplot(1, 2, 2)
    acc_values = history_dict['accuracy']
    val_acc_values = history_dict['val_accuracy']
    ax2.plot(epochs, acc_values, 'ro', label='Training accuracy')
    ax2.plot(epochs, val_acc_values, 'b', label='Validation accuracy', linewidth=2)
    plt.title('Training and Validation Accuracy', fontsize=14)
    plt.xlabel('Epochs (Early Stopping)', fontsize=12)
    plt.ylabel('Accuracy', fontsize=12)
    plt.legend(fontsize='12')
    # plt.ylim((0.8, 0.99))
    plt.show()

input_dim=Smaller_Training.shape[1]

neurons=50

loss="binary_crossentropy"

activation="relu"

metrics=['accuracy']

activation_out='sigmoid'

np.random.seed(42)

tf.random.set_seed(42)

keras.backend.clear_session() # Clear the previous model

model = keras.models.Sequential()

# Input & Hidden Layer 1

model.add(keras.layers.Dense(neurons,input_dim=input_dim, activation=activation))

# Hidden Layer 2

model.add(keras.layers.Dense(neurons,activation=activation))

# Hidden Layer 3

model.add(keras.layers.Dense(neurons,activation=activation))

# Hidden Layer 4

model.add(keras.layers.Dense(neurons,activation=activation))

# Hidden Layer 5

model.add(keras.layers.Dense(neurons,activation=activation))

# Output Layer

model.add(keras.layers.Dense(1,activation=activation_out))

# Compile model

model.compile(optimizer='adam',loss=loss,metrics=metrics)

# Early stopping to avoid overfitting

monitor= keras.callbacks.EarlyStopping(min_delta=1e-3,patience=3)

history=model.fit(Smaller_Training,Smaller_Training_Target,batch_size=32,validation_data=

(Validation,Validation_Target),callbacks=[monitor],verbose=1,epochs=1000)

Epoch 1/1000
16/16 [==============================] - 0s 9ms/step - loss: 0.6299 - accuracy: 0.7026 - val_loss: 0.5107 - val_accuracy: 0.7886
Epoch 2/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.4022 - accuracy: 0.8126 - val_loss: 0.3251 - val_accuracy: 0.8049
Epoch 3/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.2559 - accuracy: 0.8839 - val_loss: 0.2440 - val_accuracy: 0.9106
Epoch 4/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.2100 - accuracy: 0.9124 - val_loss: 0.2075 - val_accuracy: 0.9106
Epoch 5/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1815 - accuracy: 0.9246 - val_loss: 0.2062 - val_accuracy: 0.9106
Epoch 6/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1625 - accuracy: 0.9369 - val_loss: 0.1906 - val_accuracy: 0.9024
Epoch 7/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1546 - accuracy: 0.9348 - val_loss: 0.2178 - val_accuracy: 0.9187
Epoch 8/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1336 - accuracy: 0.9450 - val_loss: 0.1865 - val_accuracy: 0.9268
Epoch 9/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1176 - accuracy: 0.9552 - val_loss: 0.1837 - val_accuracy: 0.9106
Epoch 10/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1017 - accuracy: 0.9674 - val_loss: 0.1792 - val_accuracy: 0.9106
Epoch 11/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.0864 - accuracy: 0.9715 - val_loss: 0.2306 - val_accuracy: 0.9431
Epoch 12/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.1071 - accuracy: 0.9593 - val_loss: 0.2208 - val_accuracy: 0.9350
Epoch 13/1000
16/16 [==============================] - 0s 2ms/step - loss: 0.0927 - accuracy: 0.9532 - val_loss: 0.2125 - val_accuracy: 0.9350
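By default, keras.callbacks.EarlyStopping monitors val_loss and stops once it has failed to improve by more than min_delta for patience consecutive epochs. The plain-Python sketch below is a simplified re-implementation of that rule (not Keras's source code), replayed on the val_loss values printed in the log above. It reports that training stops at epoch 13 and that the best validation loss was reached at epoch 10, which matches the log. Note that with the default restore_best_weights=False, the model keeps the weights from the last epoch (13), not the best one; passing restore_best_weights=True rolls back to the best epoch.

```python
def early_stopping_epochs(val_losses, min_delta=1e-3, patience=3):
    """Return (stop_epoch, best_epoch), both 1-based, for a metric to be minimized."""
    best, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss + min_delta < best:  # improved by more than min_delta
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:     # no improvement for `patience` epochs in a row
                return epoch, best_epoch
    return len(val_losses), best_epoch

# val_loss values copied from the training log above
val_loss = [0.5107, 0.3251, 0.2440, 0.2075, 0.2062, 0.1906, 0.2178,
            0.1865, 0.1837, 0.1792, 0.2306, 0.2208, 0.2125]
print(early_stopping_epochs(val_loss))
```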

plot(history)

# Accuracy on training set

from sklearn.metrics import accuracy_score

pred=model.predict(X_train_Std)

pred=[1 if i >= 0.5 else 0 for i in pred]

#

acr=accuracy_score(y_train, pred)

print(acr)

0.9690553745928339

# Accuracy on test set

X_test = test_set.drop("Binary Classes", axis=1)

y_test = test_set["Binary Classes"].values

#

X_test_Std=scaler.transform(X_test)

#

pred=model.predict(X_test_Std)

pred=[1 if i >= 0.5 else 0 for i in pred]

acr=accuracy_score(y_test, pred)

print(acr)

0.948051948051948

def DNN(input_dim, neurons=50, loss="binary_crossentropy", activation="relu", Nout=1, L2_regularizer=False,
        metrics=['accuracy'], activation_out='sigmoid', init_mode=None, BatchOpt=False, dropout_rate=False):
    """Function to run Deep Neural Network (5 hidden layers) for different hyperparameters"""
    np.random.seed(42)
    tf.random.set_seed(42)

    if activation == 'Leaky_relu':
        activation = keras.layers.LeakyReLU(alpha=0.2)
    kernel_regularizer = keras.regularizers.l2() if L2_regularizer else None

    # Create model
    model = keras.models.Sequential()

    # Hidden layers 1 to 5 (the first one also receives the input)
    for i in range(5):
        layer_kwargs = {'input_dim': input_dim} if i == 0 else {}
        model.add(keras.layers.Dense(neurons, activation=activation, kernel_initializer=init_mode,
                                     kernel_regularizer=kernel_regularizer, **layer_kwargs))
        if BatchOpt:
            model.add(keras.layers.BatchNormalization())
        if dropout_rate:
            model.add(keras.layers.Dropout(dropout_rate))

    # Output layer
    model.add(keras.layers.Dense(Nout, activation=activation_out))

    # Compile model
    model.compile(optimizer='adam', loss=loss, metrics=metrics)
    return model

Fine Tune Hyperparameters

Classification

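# tf.keras scikit-learn wrapper (deprecated and removed in recent TensorFlow releases;
# the SciKeras package provides a maintained replacement)
from tensorflow.keras.wrappers.scikit_learn import KerasClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import RandomizedSearchCV
import warnings
warnings.filterwarnings('ignore')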

# define the grid search parameters

#param_grid = {'init_mode' : ['he_normal', 'he_uniform'],'neurons' : [150,500,1000]

# ,'dropout_rate' : [False,0.3, 0.4,0.5],'BatchOpt':[True,False],

# 'activation' : ['relu','elu', 'Leaky_relu'],'L2_regularizer':[True,False]}

param_grid = {'init_mode' : [None,'he_normal', 'he_uniform'],'neurons' : [50,100,150],'L2_regularizer':[True,False]

,'dropout_rate' : [False, 0.3, 0.4],'activation': ['relu','elu', 'Leaky_relu']}

# Run Keras Classifier

model = KerasClassifier(build_fn=DNN,input_dim=Smaller_Training.shape[1])

# Apply Scikit Learn GridSearchCV

#grid = GridSearchCV(model,param_grid, cv=2, scoring='accuracy')

grid = RandomizedSearchCV(model,param_grid,n_iter=40, cv=2, scoring='accuracy')

# Early stopping to avoid overfitting

monitor= keras.callbacks.EarlyStopping(min_delta=1e-3,patience=5, verbose=0)

grid_result = grid.fit(Smaller_Training,Smaller_Training_Target,batch_size=32,validation_data=

(Validation,Validation_Target),callbacks=[monitor],verbose=0,epochs=1000)


grid_result.best_params_

{'neurons': 50,
 'init_mode': 'he_normal',
 'dropout_rate': 0.4,
 'activation': 'Leaky_relu',
 'L2_regularizer': True}

Accuracy=grid_result.best_score_

print('Accuracy from SearchCV: ',Accuracy)

Accuracy from SearchCV: 0.9552347768375643
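RandomizedSearchCV does not try every combination in param_grid: with n_iter=40 it evaluates only 40 of them. The plain-Python sketch below (not scikit-learn's code) enumerates the full grid with itertools.product and samples 40 distinct candidates. This mirrors what scikit-learn's ParameterSampler does when every parameter is given as a list, as here. With cv=2, each sampled candidate is trained twice, which gives 80 fits plus one final refit of the best candidate (refit=True by default).

```python
import itertools
import random

# Same search space as param_grid above
param_grid = {'init_mode': [None, 'he_normal', 'he_uniform'], 'neurons': [50, 100, 150],
              'L2_regularizer': [True, False], 'dropout_rate': [False, 0.3, 0.4],
              'activation': ['relu', 'elu', 'Leaky_relu']}

names = list(param_grid)
all_combos = [dict(zip(names, values)) for values in itertools.product(*param_grid.values())]

random.seed(0)
sampled = random.sample(all_combos, k=40)  # 40 distinct candidates, as with n_iter=40

print("grid size:", len(all_combos), "| sampled:", len(sampled))
print("first candidate:", sampled[0])
```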

#cvreslt = grid_result.cv_results_
#cvreslt_params = [str(i) for i in cvreslt["params"]]
#for mean_score, params in sorted(zip(cvreslt["mean_test_score"], cvreslt_params)):
#    print(mean_score, params)  # mean cross-validated accuracy for each candidate

model_DNN = DNN (input_dim=Smaller_Training.shape[1],neurons=50,Nout=1,L2_regularizer= True,

init_mode= 'he_normal', dropout_rate= 0.4,activation= 'Leaky_relu')

# Early stopping to avoid overfitting

monitor= keras.callbacks.EarlyStopping(min_delta=1e-3,patience=3)

history=model_DNN.fit(Smaller_Training,Smaller_Training_Target,batch_size=32,validation_data=

(Validation,Validation_Target),callbacks=[monitor],verbose=1,epochs=1000)

Epoch 1/1000 16/16 [==============================] - 0s 23ms/step - loss: 7.2438 - accuracy: 0.5173 - val_loss: 5.6480 - val_accuracy: 0.7886 Epoch 2/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.2205 - accuracy: 0.6558 - val_loss: 5.3534 - val_accuracy: 0.7886 Epoch 3/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.7808 - accuracy: 0.7108 - val_loss: 5.1099 - val_accuracy: 0.7967 Epoch 4/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.3389 - accuracy: 0.7515 - val_loss: 4.9813 - val_accuracy: 0.8130 Epoch 5/1000 16/16 [==============================] - 0s 6ms/step - loss: 5.2044 - accuracy: 0.7352 - val_loss: 4.8732 - val_accuracy: 0.8455 Epoch 6/1000 16/16 [==============================] - 0s 6ms/step - loss: 5.1710 - accuracy: 0.7393 - val_loss: 4.7664 - val_accuracy: 0.8374 Epoch 7/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.0138 - accuracy: 0.7576 - val_loss: 4.6645 - val_accuracy: 0.8455 Epoch 8/1000 16/16 [==============================] - 0s 6ms/step - loss: 4.8114 - accuracy: 0.7780 - val_loss: 4.5619 - val_accuracy: 0.8455 Epoch 9/1000 16/16 [==============================] - 0s 6ms/step - loss: 4.6222 - accuracy: 0.7862 - val_loss: 4.4599 - val_accuracy: 0.8537 Epoch 10/1000 16/16 [==============================] - 0s 5ms/step - loss: 4.5346 - accuracy: 0.7984 - val_loss: 4.3572 - val_accuracy: 0.8618 Epoch 11/1000 16/16 [==============================] - 0s 5ms/step - loss: 4.4613 - accuracy: 0.7841 - val_loss: 4.2570 - val_accuracy: 0.8699 Epoch 12/1000 16/16 [==============================] - 0s 5ms/step - loss: 4.2972 - accuracy: 0.8065 - val_loss: 4.1529 - val_accuracy: 0.8699 Epoch 13/1000 16/16 [==============================] - 0s 6ms/step - loss: 4.1831 - accuracy: 0.8248 - val_loss: 4.0478 - val_accuracy: 0.8862 Epoch 14/1000 16/16 [==============================] - 0s 5ms/step - loss: 4.0671 - accuracy: 0.8167 - val_loss: 3.9433 - val_accuracy: 0.8780 Epoch 
15/1000 16/16 [==============================] - 0s 6ms/step - loss: 3.9907 - accuracy: 0.8126 - val_loss: 3.8472 - val_accuracy: 0.8862 Epoch 16/1000 16/16 [==============================] - 0s 6ms/step - loss: 3.8616 - accuracy: 0.8330 - val_loss: 3.7467 - val_accuracy: 0.9106 Epoch 17/1000 16/16 [==============================] - 0s 5ms/step - loss: 3.7224 - accuracy: 0.8595 - val_loss: 3.6488 - val_accuracy: 0.9024 Epoch 18/1000 16/16 [==============================] - 0s 6ms/step - loss: 3.6893 - accuracy: 0.8065 - val_loss: 3.5539 - val_accuracy: 0.9024 Epoch 19/1000 16/16 [==============================] - 0s 5ms/step - loss: 3.5481 - accuracy: 0.8513 - val_loss: 3.4619 - val_accuracy: 0.9024 Epoch 20/1000 16/16 [==============================] - 0s 5ms/step - loss: 3.4628 - accuracy: 0.8269 - val_loss: 3.3696 - val_accuracy: 0.9106 Epoch 21/1000 16/16 [==============================] - 0s 6ms/step - loss: 3.3796 - accuracy: 0.8534 - val_loss: 3.2793 - val_accuracy: 0.9024 Epoch 22/1000 16/16 [==============================] - 0s 6ms/step - loss: 3.2951 - accuracy: 0.8126 - val_loss: 3.1904 - val_accuracy: 0.8862 Epoch 23/1000 16/16 [==============================] - 0s 5ms/step - loss: 3.1819 - accuracy: 0.8371 - val_loss: 3.1000 - val_accuracy: 0.8943 Epoch 24/1000 16/16 [==============================] - 0s 6ms/step - loss: 3.0754 - accuracy: 0.8615 - val_loss: 3.0115 - val_accuracy: 0.8943 Epoch 25/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.9731 - accuracy: 0.8839 - val_loss: 2.9267 - val_accuracy: 0.8943 Epoch 26/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.8915 - accuracy: 0.8778 - val_loss: 2.8423 - val_accuracy: 0.8862 Epoch 27/1000 16/16 [==============================] - 0s 5ms/step - loss: 2.8337 - accuracy: 0.8697 - val_loss: 2.7625 - val_accuracy: 0.8862 Epoch 28/1000 16/16 [==============================] - 0s 5ms/step - loss: 2.7554 - accuracy: 0.8656 - val_loss: 2.6861 - val_accuracy: 0.9024 
Epoch 29/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.6903 - accuracy: 0.8554 - val_loss: 2.6118 - val_accuracy: 0.9024 Epoch 30/1000 16/16 [==============================] - 0s 7ms/step - loss: 2.5884 - accuracy: 0.8574 - val_loss: 2.5336 - val_accuracy: 0.8943 Epoch 31/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.5133 - accuracy: 0.8635 - val_loss: 2.4595 - val_accuracy: 0.8862 Epoch 32/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.4643 - accuracy: 0.8493 - val_loss: 2.3892 - val_accuracy: 0.9106 Epoch 33/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.3618 - accuracy: 0.8717 - val_loss: 2.3155 - val_accuracy: 0.9106 Epoch 34/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.2916 - accuracy: 0.8798 - val_loss: 2.2442 - val_accuracy: 0.9106 Epoch 35/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.2533 - accuracy: 0.8697 - val_loss: 2.1753 - val_accuracy: 0.9106 Epoch 36/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.1899 - accuracy: 0.8758 - val_loss: 2.1105 - val_accuracy: 0.9024 Epoch 37/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.0972 - accuracy: 0.8839 - val_loss: 2.0492 - val_accuracy: 0.9106 Epoch 38/1000 16/16 [==============================] - 0s 6ms/step - loss: 2.0497 - accuracy: 0.8758 - val_loss: 1.9875 - val_accuracy: 0.9106 Epoch 39/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.9856 - accuracy: 0.8697 - val_loss: 1.9291 - val_accuracy: 0.9106 Epoch 40/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.9277 - accuracy: 0.8676 - val_loss: 1.8725 - val_accuracy: 0.9106 Epoch 41/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.8820 - accuracy: 0.8656 - val_loss: 1.8156 - val_accuracy: 0.9106 Epoch 42/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.8179 - accuracy: 0.8778 - val_loss: 1.7604 - val_accuracy: 
0.9024 Epoch 43/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.7660 - accuracy: 0.8615 - val_loss: 1.7082 - val_accuracy: 0.9024 Epoch 44/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.7076 - accuracy: 0.8819 - val_loss: 1.6572 - val_accuracy: 0.9024 Epoch 45/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.6567 - accuracy: 0.8778 - val_loss: 1.6078 - val_accuracy: 0.9024 Epoch 46/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.6279 - accuracy: 0.8635 - val_loss: 1.5583 - val_accuracy: 0.8943 Epoch 47/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.5475 - accuracy: 0.8859 - val_loss: 1.5110 - val_accuracy: 0.8943 Epoch 48/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.5162 - accuracy: 0.8941 - val_loss: 1.4687 - val_accuracy: 0.8943 Epoch 49/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.4688 - accuracy: 0.8941 - val_loss: 1.4264 - val_accuracy: 0.8943 Epoch 50/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.4274 - accuracy: 0.8859 - val_loss: 1.3863 - val_accuracy: 0.8862 Epoch 51/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.3822 - accuracy: 0.8921 - val_loss: 1.3459 - val_accuracy: 0.8862 Epoch 52/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.3464 - accuracy: 0.8961 - val_loss: 1.3047 - val_accuracy: 0.8862 Epoch 53/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.3007 - accuracy: 0.8839 - val_loss: 1.2664 - val_accuracy: 0.8862 Epoch 54/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.2616 - accuracy: 0.8880 - val_loss: 1.2298 - val_accuracy: 0.8862 Epoch 55/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.2377 - accuracy: 0.8859 - val_loss: 1.1955 - val_accuracy: 0.8862 Epoch 56/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.2188 - accuracy: 0.8859 - val_loss: 1.1643 - 
val_accuracy: 0.8862 Epoch 57/1000 16/16 [==============================] - ETA: 0s - loss: 1.1867 - accuracy: 0.87 - 0s 6ms/step - loss: 1.1564 - accuracy: 0.9002 - val_loss: 1.1312 - val_accuracy: 0.8862 Epoch 58/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.1519 - accuracy: 0.8880 - val_loss: 1.0995 - val_accuracy: 0.8862 Epoch 59/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.0893 - accuracy: 0.8880 - val_loss: 1.0698 - val_accuracy: 0.8862 Epoch 60/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.0736 - accuracy: 0.8941 - val_loss: 1.0394 - val_accuracy: 0.8862 Epoch 61/1000 16/16 [==============================] - 0s 6ms/step - loss: 1.0358 - accuracy: 0.8961 - val_loss: 1.0117 - val_accuracy: 0.8862 Epoch 62/1000 16/16 [==============================] - 0s 5ms/step - loss: 1.0184 - accuracy: 0.8737 - val_loss: 0.9855 - val_accuracy: 0.8862 Epoch 63/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.9921 - accuracy: 0.9043 - val_loss: 0.9603 - val_accuracy: 0.8862 Epoch 64/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.9572 - accuracy: 0.8880 - val_loss: 0.9355 - val_accuracy: 0.8862 Epoch 65/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.9274 - accuracy: 0.9063 - val_loss: 0.9091 - val_accuracy: 0.8862 Epoch 66/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.8931 - accuracy: 0.9104 - val_loss: 0.8845 - val_accuracy: 0.8862 Epoch 67/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.8582 - accuracy: 0.9185 - val_loss: 0.8658 - val_accuracy: 0.8943 Epoch 68/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.8680 - accuracy: 0.9043 - val_loss: 0.8424 - val_accuracy: 0.8943 Epoch 69/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.8521 - accuracy: 0.8941 - val_loss: 0.8191 - val_accuracy: 0.8862 Epoch 70/1000 16/16 [==============================] - 0s 7ms/step - loss: 
0.8107 - accuracy: 0.9084 - val_loss: 0.7954 - val_accuracy: 0.8862 Epoch 71/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.7960 - accuracy: 0.9002 - val_loss: 0.7770 - val_accuracy: 0.8862 Epoch 72/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.7849 - accuracy: 0.9084 - val_loss: 0.7607 - val_accuracy: 0.9024 Epoch 73/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.7537 - accuracy: 0.9002 - val_loss: 0.7408 - val_accuracy: 0.8862 Epoch 74/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.7341 - accuracy: 0.9104 - val_loss: 0.7285 - val_accuracy: 0.9106 Epoch 75/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.7341 - accuracy: 0.9022 - val_loss: 0.7113 - val_accuracy: 0.8943 Epoch 76/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.7385 - accuracy: 0.9022 - val_loss: 0.6971 - val_accuracy: 0.9106 Epoch 77/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.6800 - accuracy: 0.9185 - val_loss: 0.6867 - val_accuracy: 0.9024 Epoch 78/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.6763 - accuracy: 0.9022 - val_loss: 0.6682 - val_accuracy: 0.8943 Epoch 79/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.6403 - accuracy: 0.9104 - val_loss: 0.6545 - val_accuracy: 0.9024 Epoch 80/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.6531 - accuracy: 0.9063 - val_loss: 0.6411 - val_accuracy: 0.9106 Epoch 81/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.6439 - accuracy: 0.9063 - val_loss: 0.6242 - val_accuracy: 0.9106 Epoch 82/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.6101 - accuracy: 0.9246 - val_loss: 0.6117 - val_accuracy: 0.9106 Epoch 83/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.6107 - accuracy: 0.9124 - val_loss: 0.6022 - val_accuracy: 0.9106 Epoch 84/1000 16/16 [==============================] - 0s 5ms/step - 
loss: 0.5813 - accuracy: 0.9267 - val_loss: 0.5922 - val_accuracy: 0.9024 Epoch 85/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.5640 - accuracy: 0.9084 - val_loss: 0.5813 - val_accuracy: 0.9106 Epoch 86/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.5601 - accuracy: 0.9226 - val_loss: 0.5708 - val_accuracy: 0.9106 Epoch 87/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.5485 - accuracy: 0.9328 - val_loss: 0.5603 - val_accuracy: 0.9106 Epoch 88/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.5374 - accuracy: 0.9267 - val_loss: 0.5445 - val_accuracy: 0.9106 Epoch 89/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.5237 - accuracy: 0.9226 - val_loss: 0.5290 - val_accuracy: 0.9187 Epoch 90/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.5149 - accuracy: 0.9287 - val_loss: 0.5214 - val_accuracy: 0.9106 Epoch 91/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.4987 - accuracy: 0.9308 - val_loss: 0.5168 - val_accuracy: 0.9106 Epoch 92/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.4884 - accuracy: 0.9369 - val_loss: 0.5092 - val_accuracy: 0.9431 Epoch 93/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.4644 - accuracy: 0.9430 - val_loss: 0.4945 - val_accuracy: 0.9187 Epoch 94/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.4782 - accuracy: 0.9287 - val_loss: 0.4817 - val_accuracy: 0.9187 Epoch 95/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.4594 - accuracy: 0.9226 - val_loss: 0.4749 - val_accuracy: 0.9350 Epoch 96/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.4708 - accuracy: 0.9308 - val_loss: 0.4677 - val_accuracy: 0.9268 Epoch 97/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.4574 - accuracy: 0.9328 - val_loss: 0.4597 - val_accuracy: 0.9431 Epoch 98/1000 16/16 [==============================] - 0s 5ms/step 
- loss: 0.4398 - accuracy: 0.9246 - val_loss: 0.4537 - val_accuracy: 0.9268 Epoch 99/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.4325 - accuracy: 0.9450 - val_loss: 0.4441 - val_accuracy: 0.9593 Epoch 100/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.4233 - accuracy: 0.9389 - val_loss: 0.4415 - val_accuracy: 0.9268 Epoch 101/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.4243 - accuracy: 0.9226 - val_loss: 0.4377 - val_accuracy: 0.9268 Epoch 102/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.4111 - accuracy: 0.9287 - val_loss: 0.4300 - val_accuracy: 0.9268 Epoch 103/1000 16/16 [==============================] - 0s 9ms/step - loss: 0.4037 - accuracy: 0.9328 - val_loss: 0.4268 - val_accuracy: 0.9512 Epoch 104/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.4062 - accuracy: 0.9246 - val_loss: 0.4164 - val_accuracy: 0.9268 Epoch 105/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.4033 - accuracy: 0.9348 - val_loss: 0.4145 - val_accuracy: 0.9268 Epoch 106/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.3970 - accuracy: 0.9287 - val_loss: 0.4109 - val_accuracy: 0.9187 Epoch 107/1000 16/16 [==============================] - 0s 9ms/step - loss: 0.3842 - accuracy: 0.9328 - val_loss: 0.4060 - val_accuracy: 0.9350 Epoch 108/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3892 - accuracy: 0.9287 - val_loss: 0.3976 - val_accuracy: 0.9431 Epoch 109/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.3727 - accuracy: 0.9369 - val_loss: 0.3899 - val_accuracy: 0.9431 Epoch 110/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.3897 - accuracy: 0.9308 - val_loss: 0.3903 - val_accuracy: 0.9431 Epoch 111/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.3606 - accuracy: 0.9389 - val_loss: 0.3883 - val_accuracy: 0.9431 Epoch 112/1000 16/16 
[==============================] - 0s 7ms/step - loss: 0.3603 - accuracy: 0.9430 - val_loss: 0.3866 - val_accuracy: 0.9431 Epoch 113/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3659 - accuracy: 0.9287 - val_loss: 0.3818 - val_accuracy: 0.9431 Epoch 114/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.3554 - accuracy: 0.9328 - val_loss: 0.3745 - val_accuracy: 0.9431 Epoch 115/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3467 - accuracy: 0.9430 - val_loss: 0.3693 - val_accuracy: 0.9431 Epoch 116/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.3429 - accuracy: 0.9328 - val_loss: 0.3680 - val_accuracy: 0.9431 Epoch 117/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3399 - accuracy: 0.9389 - val_loss: 0.3657 - val_accuracy: 0.9268 Epoch 118/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.3414 - accuracy: 0.9511 - val_loss: 0.3696 - val_accuracy: 0.9431 Epoch 119/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.3456 - accuracy: 0.9389 - val_loss: 0.3646 - val_accuracy: 0.9350 Epoch 120/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3440 - accuracy: 0.9348 - val_loss: 0.3584 - val_accuracy: 0.9431 Epoch 121/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3253 - accuracy: 0.9470 - val_loss: 0.3543 - val_accuracy: 0.9431 Epoch 122/1000 16/16 [==============================] - 0s 9ms/step - loss: 0.3279 - accuracy: 0.9491 - val_loss: 0.3528 - val_accuracy: 0.9350 Epoch 123/1000 16/16 [==============================] - 0s 9ms/step - loss: 0.3283 - accuracy: 0.9369 - val_loss: 0.3470 - val_accuracy: 0.9431 Epoch 124/1000 16/16 [==============================] - 0s 8ms/step - loss: 0.3326 - accuracy: 0.9287 - val_loss: 0.3419 - val_accuracy: 0.9431 Epoch 125/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3235 - accuracy: 0.9450 - val_loss: 0.3352 - val_accuracy: 0.9431 
Epoch 126/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3150 - accuracy: 0.9369 - val_loss: 0.3322 - val_accuracy: 0.9593 Epoch 127/1000 16/16 [==============================] - 0s 7ms/step - loss: 0.3253 - accuracy: 0.9348 - val_loss: 0.3368 - val_accuracy: 0.9187 Epoch 128/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.3214 - accuracy: 0.9308 - val_loss: 0.3337 - val_accuracy: 0.9431 Epoch 129/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.3190 - accuracy: 0.9328 - val_loss: 0.3301 - val_accuracy: 0.9350 Epoch 130/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.3034 - accuracy: 0.9450 - val_loss: 0.3351 - val_accuracy: 0.9431 Epoch 131/1000 16/16 [==============================] - 0s 5ms/step - loss: 0.3088 - accuracy: 0.9430 - val_loss: 0.3338 - val_accuracy: 0.9350 Epoch 132/1000 16/16 [==============================] - 0s 6ms/step - loss: 0.2985 - accuracy: 0.9552 - val_loss: 0.3292 - val_accuracy: 0.9431

# Accuracy on training set

pred=model_DNN.predict(X_train_Std)

# Threshold the sigmoid output at 0.5 to obtain class labels

pred=[1 if i >= 0.5 else 0 for i in pred]

acr=accuracy_score(y_train, pred)

print('Accuracy of Training: ',acr)

# Accuracy on test set

pred=model_DNN.predict(X_test_Std)

pred=[1 if i >= 0.5 else 0 for i in pred]

acr=accuracy_score(y_test, pred)

print('Accuracy of Test: ',acr)

Accuracy of Training: 0.9560260586319218

Accuracy of Test: 0.961038961038961
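Thresholding and accuracy can also be written without the list comprehension. The sketch below is a minimal NumPy version, assuming the network ends in a single sigmoid unit so that `predict` returns probabilities of shape (n, 1); `predict_labels` and `accuracy` are hypothetical helper names, not part of Keras or scikit-learn.

```python
import numpy as np

def predict_labels(probabilities, threshold=0.5):
    """Turn sigmoid outputs of shape (n, 1) or (n,) into 0/1 labels."""
    probs = np.asarray(probabilities).ravel()   # flatten (n, 1) -> (n,)
    return (probs >= threshold).astype(int)     # 1 where p >= threshold, else 0

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    return float(np.mean(y_true == y_pred))

# Hypothetical sigmoid outputs, shaped like model.predict() returns them: (n, 1)
probs = np.array([[0.91], [0.12], [0.50], [0.49]])
labels = predict_labels(probs)
print(labels)                          # [1 0 1 0]
print(accuracy([1, 0, 1, 1], labels))  # 0.75
```

Working on whole arrays avoids a Python loop over every prediction, which matters once the data set grows.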

Regression¶

df_reg=df.copy()

df_reg.drop(['Binary Classes','Multi-Classes'], axis=1, inplace=True)

# Training and Test

train_set, test_set = train_test_split(df_reg, test_size=0.2, random_state=42)

#

X_train = train_set.drop("Heating Load", axis=1)

y_train = train_set["Heating Load"].values

# Standardize the features (statistics are learned from the training set only)

scaler = StandardScaler()

X_train_Std=scaler.fit_transform(X_train)
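`StandardScaler` learns one mean and one standard deviation per feature from the training data, and the same numbers are later reused on the test set with `scaler.transform`. A minimal NumPy sketch of that idea follows; `fit_standardizer` and `standardize` are hypothetical names, and the sketch assumes the population standard deviation (ddof=0) with a scale of 1 for constant columns, which to our understanding matches scikit-learn's defaults.

```python
import numpy as np

def fit_standardizer(X_train):
    """Learn per-feature mean and standard deviation from the training data."""
    X_train = np.asarray(X_train, dtype=float)
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)      # population standard deviation (ddof=0)
    std[std == 0] = 1.0            # constant columns: avoid division by zero
    return mean, std

def standardize(X, mean, std):
    """Apply the training statistics to any data set (training or test)."""
    return (np.asarray(X, dtype=float) - mean) / std

X_train = np.array([[1.0, 10.0], [3.0, 10.0], [5.0, 10.0]])
X_test = np.array([[7.0, 10.0]])

mean, std = fit_standardizer(X_train)     # fit on the training set only
print(standardize(X_train, mean, std))
print(standardize(X_test, mean, std))     # reuse the training mean/std
```

Fitting the statistics on the test set as well would leak information about it into the model, which is why the test features are only transformed.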

# Smaller training set

# Split the standardized training data into a smaller training set and a validation set for early stopping.

Training_c=np.concatenate((X_train_Std,np.array(y_train).reshape(-1,1)),axis=1)

Smaller_Training, Validation = train_test_split(Training_c, test_size=0.2, random_state=100)

#

Smaller_Training_Target=Smaller_Training[:,-1]

Smaller_Training=Smaller_Training[:,:-1]

#

Validation_Target=Validation[:,-1]

Validation=Validation[:,:-1]
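Concatenating features and target before splitting guarantees that each row keeps its target. The same guarantee comes from drawing one shuffled index array and using it for both arrays; `train_test_split` also accepts several arrays at once, e.g. `train_test_split(X_train_Std, y_train, test_size=0.2, random_state=100)`. The NumPy sketch below shows only the shared-index idea; `holdout_split` is a hypothetical helper and its random stream differs from scikit-learn's, so it will not select the same rows.

```python
import numpy as np

def holdout_split(X, y, val_fraction=0.2, seed=100):
    """Split X and y into training/validation parts with ONE shared permutation,
    so every feature row stays paired with its target."""
    X, y = np.asarray(X), np.asarray(y)
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))               # shuffled row indices
    n_val = int(round(val_fraction * len(X)))   # size of the validation part
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    return X[train_idx], X[val_idx], y[train_idx], y[val_idx]

X = np.arange(20).reshape(10, 2)   # toy features: row i is [2i, 2i+1]
y = np.arange(10) * 100            # toy target: y_i = 100 * i
X_tr, X_val, y_tr, y_val = holdout_split(X, y)
print(X_tr.shape, X_val.shape)     # (8, 2) (2, 2)
```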

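# Note: keras.wrappers.scikit_learn exists only in older Keras/TensorFlow releases; newer releases use the SciKeras package instead

from keras.wrappers.scikit_learn import KerasRegressor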

from sklearn.model_selection import RandomizedSearchCV

import warnings

warnings.filterwarnings('ignore')

# Define the hyperparameter search space (sampled by RandomizedSearchCV)

#param_grid = {'init_mode' : ['he_normal', 'he_uniform'],'neurons' : [150,500,1000]

# ,'dropout_rate' : [False,0.3, 0.4,0.5],'BatchOpt':[True,False],

# 'activation' : ['relu','elu', 'Leaky_relu'],'L2_regularizer':[True,False]}

param_grid = {'init_mode' : [None,'he_normal', 'he_uniform'],'neurons' : [50,100,150],'L2_regularizer':[True,False]

,'dropout_rate' : [False, 0.3, 0.4],'activation': ['relu','elu', 'Leaky_relu']}

# Wrap the Keras model builder (DNN) in a scikit-learn-compatible regressor

model = KerasRegressor(build_fn=DNN,input_dim=Smaller_Training.shape[1],metrics=None,

activation_out=None,loss='mse')

# Apply Scikit Learn RandomizedSearchCV

grid = RandomizedSearchCV(model,param_grid,n_iter=40,scoring='neg_mean_squared_error', cv=2)
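`RandomizedSearchCV` does not try every combination in `param_grid`: there are 3 × 3 × 2 × 3 × 3 = 162 possible combinations, and with `n_iter=40` only 40 are trained, each on `cv=2` folds (80 fits, plus a final refit of the best candidate). The plain-Python sketch below imitates the sampling step, assuming that when every parameter is given as a list the candidates are drawn without replacement; `sample_candidates` is a hypothetical helper, not scikit-learn's `ParameterSampler`.

```python
import itertools
import random

# Same search space as param_grid above, repeated so the sketch is self-contained
param_grid = {
    'init_mode': [None, 'he_normal', 'he_uniform'],
    'neurons': [50, 100, 150],
    'L2_regularizer': [True, False],
    'dropout_rate': [False, 0.3, 0.4],
    'activation': ['relu', 'elu', 'Leaky_relu'],
}

def sample_candidates(grid, n_iter, seed=0):
    """Draw n_iter distinct parameter combinations from a grid of lists."""
    keys = list(grid)
    all_combos = list(itertools.product(*(grid[k] for k in keys)))  # full grid
    rng = random.Random(seed)
    picked = rng.sample(all_combos, n_iter)                         # no repeats
    return [dict(zip(keys, combo)) for combo in picked], len(all_combos)

candidates, grid_size = sample_candidates(param_grid, n_iter=40)
print(grid_size)         # 162 combinations in the full grid
print(len(candidates))   # 40 of them are actually trained
print(candidates[0])     # one sampled configuration
```

Random search trades completeness for cost: here it covers about a quarter of the grid for a quarter of the training time.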

# Early stopping to avoid overfitting

monitor= keras.callbacks.EarlyStopping(min_delta=1e-3,patience=5, verbose=0)

grid_result = grid.fit(Smaller_Training,Smaller_Training_Target,batch_size=32,validation_data=

(Validation,Validation_Target),callbacks=[monitor],verbose=0,epochs=1000)

grid_result.best_params_

{'neurons': 50,

'init_mode': 'he_normal',

'dropout_rate': False,

'activation': 'elu',

'L2_regularizer': True}

rmse=np.sqrt(-grid_result.best_score_)

print('rmse from SearchCV: ',rmse)

rmse from SearchCV: 0.9921502320514267
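With `scoring='neg_mean_squared_error'`, scikit-learn reports the negative MSE (so that larger is better), and `best_score_` is the mean of that score over the `cv` folds for the best candidate. That is why the code flips the sign before taking the square root. The worked example below uses made-up fold scores to show the conversion; note that the root of the mean MSE is not the same as the mean of the per-fold RMSEs.

```python
import math

# Hypothetical per-fold scores of one candidate (scoring='neg_mean_squared_error', cv=2)
fold_scores = [-0.90, -1.10]

# best_score_ stores the mean of the fold scores, i.e. minus the mean MSE
best_score = sum(fold_scores) / len(fold_scores)

# Flip the sign back to an MSE, then take the square root
rmse = math.sqrt(-best_score)

# For comparison: averaging the per-fold RMSEs gives a slightly smaller number
mean_fold_rmse = sum(math.sqrt(-s) for s in fold_scores) / len(fold_scores)

print(round(rmse, 4))            # 1.0
print(round(mean_fold_rmse, 4))  # 0.9987
```

The gap comes from the square root being concave, so it is small when the folds have similar errors.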

model_DNN = DNN (input_dim=Smaller_Training.shape[1],neurons=50,Nout=1,L2_regularizer= True,

init_mode= 'he_normal', dropout_rate= False,activation= 'elu',metrics=None,

activation_out=None,loss='mse')

# Early stopping to avoid overfitting

monitor= keras.callbacks.EarlyStopping(min_delta=1e-3,patience=3)

history=model_DNN.fit(Smaller_Training,Smaller_Training_Target,batch_size=32,validation_data=

(Validation,Validation_Target),callbacks=[monitor],verbose=1,epochs=1000)

Epoch 1/1000 16/16 [==============================] - 0s 19ms/step - loss: 361.1214 - val_loss: 116.4283 Epoch 2/1000 16/16 [==============================] - 0s 6ms/step - loss: 73.0577 - val_loss: 52.1362 Epoch 3/1000 16/16 [==============================] - 0s 5ms/step - loss: 33.0666 - val_loss: 27.2461 Epoch 4/1000 16/16 [==============================] - 0s 5ms/step - loss: 25.6548 - val_loss: 23.5233 Epoch 5/1000 16/16 [==============================] - 0s 5ms/step - loss: 21.2136 - val_loss: 19.3161 Epoch 6/1000 16/16 [==============================] - 0s 5ms/step - loss: 18.6434 - val_loss: 17.7504 Epoch 7/1000 16/16 [==============================] - 0s 5ms/step - loss: 16.7700 - val_loss: 15.2312 Epoch 8/1000 16/16 [==============================] - 0s 5ms/step - loss: 15.3054 - val_loss: 14.2770 Epoch 9/1000 16/16 [==============================] - 0s 5ms/step - loss: 14.2476 - val_loss: 12.8281 Epoch 10/1000 16/16 [==============================] - 0s 5ms/step - loss: 13.4733 - val_loss: 12.2389 Epoch 11/1000 16/16 [==============================] - 0s 5ms/step - loss: 12.8268 - val_loss: 11.7605 Epoch 12/1000 16/16 [==============================] - 0s 5ms/step - loss: 12.6046 - val_loss: 11.4566 Epoch 13/1000 16/16 [==============================] - 0s 5ms/step - loss: 12.2183 - val_loss: 11.4536 Epoch 14/1000 16/16 [==============================] - 0s 5ms/step - loss: 11.9643 - val_loss: 11.1577 Epoch 15/1000 16/16 [==============================] - 0s 4ms/step - loss: 11.7187 - val_loss: 11.4537 Epoch 16/1000 16/16 [==============================] - 0s 5ms/step - loss: 11.9724 - val_loss: 11.4117 Epoch 17/1000 16/16 [==============================] - 0s 5ms/step - loss: 11.4273 - val_loss: 10.7460 Epoch 18/1000 16/16 [==============================] - 0s 5ms/step - loss: 11.2025 - val_loss: 10.8042 Epoch 19/1000 16/16 [==============================] - 0s 5ms/step - loss: 11.3318 - val_loss: 11.4890 Epoch 20/1000 16/16 
[==============================] - 0s 5ms/step - loss: 10.9680 - val_loss: 10.6882 Epoch 21/1000 16/16 [==============================] - 0s 5ms/step - loss: 10.9540 - val_loss: 10.6887 Epoch 22/1000 16/16 [==============================] - 0s 5ms/step - loss: 10.7690 - val_loss: 11.0485 Epoch 23/1000 16/16 [==============================] - 0s 4ms/step - loss: 11.3015 - val_loss: 10.5010 Epoch 24/1000 16/16 [==============================] - 0s 5ms/step - loss: 10.5687 - val_loss: 10.3223 Epoch 25/1000 16/16 [==============================] - 0s 5ms/step - loss: 10.4188 - val_loss: 10.2428 Epoch 26/1000 16/16 [==============================] - 0s 6ms/step - loss: 10.2434 - val_loss: 10.0675 Epoch 27/1000 16/16 [==============================] - 0s 5ms/step - loss: 10.2512 - val_loss: 10.0795 Epoch 28/1000 16/16 [==============================] - 0s 5ms/step - loss: 10.0919 - val_loss: 11.2089 Epoch 29/1000 16/16 [==============================] - 0s 6ms/step - loss: 10.3754 - val_loss: 9.8949 Epoch 30/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.9586 - val_loss: 9.8283 Epoch 31/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.9765 - val_loss: 9.7452 Epoch 32/1000 16/16 [==============================] - 0s 6ms/step - loss: 9.7140 - val_loss: 9.7438 Epoch 33/1000 16/16 [==============================] - 0s 6ms/step - loss: 9.5990 - val_loss: 9.6714 Epoch 34/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.3573 - val_loss: 9.3658 Epoch 35/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.4065 - val_loss: 9.5894 Epoch 36/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.5924 - val_loss: 9.3573 Epoch 37/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.4534 - val_loss: 8.9921 Epoch 38/1000 16/16 [==============================] - 0s 5ms/step - loss: 9.0869 - val_loss: 9.3726 Epoch 39/1000 16/16 [==============================] - 0s 7ms/step - loss: 8.7323 
- val_loss: 8.8197 Epoch 40/1000 16/16 [==============================] - 0s 5ms/step - loss: 8.5615 - val_loss: 8.6582 Epoch 41/1000 16/16 [==============================] - 0s 5ms/step - loss: 8.6700 - val_loss: 8.9338 Epoch 42/1000 16/16 [==============================] - 0s 5ms/step - loss: 8.5352 - val_loss: 8.4373 Epoch 43/1000 16/16 [==============================] - 0s 4ms/step - loss: 8.3905 - val_loss: 8.4684 Epoch 44/1000 16/16 [==============================] - 0s 5ms/step - loss: 8.1375 - val_loss: 8.5345 Epoch 45/1000 16/16 [==============================] - 0s 5ms/step - loss: 7.7458 - val_loss: 7.8624 Epoch 46/1000 16/16 [==============================] - 0s 5ms/step - loss: 7.4855 - val_loss: 7.7938 Epoch 47/1000 16/16 [==============================] - 0s 5ms/step - loss: 7.4245 - val_loss: 7.9332 Epoch 48/1000 16/16 [==============================] - 0s 5ms/step - loss: 7.2343 - val_loss: 7.3874 Epoch 49/1000 16/16 [==============================] - 0s 5ms/step - loss: 7.0389 - val_loss: 7.3465 Epoch 50/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.8609 - val_loss: 7.2266 Epoch 51/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.7493 - val_loss: 6.8793 Epoch 52/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.5357 - val_loss: 6.7888 Epoch 53/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.4111 - val_loss: 6.5601 Epoch 54/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.2250 - val_loss: 6.4860 Epoch 55/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.0510 - val_loss: 6.2454 Epoch 56/1000 16/16 [==============================] - 0s 5ms/step - loss: 6.0204 - val_loss: 6.3388 Epoch 57/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.8537 - val_loss: 5.9960 Epoch 58/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.7174 - val_loss: 6.0453 Epoch 59/1000 16/16 [==============================] - 0s 
5ms/step - loss: 5.7618 - val_loss: 6.0166 Epoch 60/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.6219 - val_loss: 5.8467 Epoch 61/1000 16/16 [==============================] - 0s 4ms/step - loss: 5.5457 - val_loss: 5.6838 Epoch 62/1000 16/16 [==============================] - 0s 4ms/step - loss: 5.4471 - val_loss: 5.6420 Epoch 63/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.4482 - val_loss: 5.6130 Epoch 64/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.5062 - val_loss: 5.6686 Epoch 65/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.4531 - val_loss: 5.4862 Epoch 66/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.3323 - val_loss: 5.4395 Epoch 67/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.2674 - val_loss: 5.4524 Epoch 68/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.3052 - val_loss: 5.4296 Epoch 69/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.3535 - val_loss: 5.5336 Epoch 70/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.2636 - val_loss: 5.4987 Epoch 71/1000 16/16 [==============================] - 0s 5ms/step - loss: 5.2582 - val_loss: 6.0121
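Training stopped at epoch 71 although `epochs=1000`. With the default `monitor='val_loss'`, an epoch counts as an improvement only if `val_loss` falls more than `min_delta` below the best value so far, and training halts after `patience` consecutive epochs without improvement. The sketch below is a simplified plain-Python version of that rule (it ignores options such as `restore_best_weights` and `baseline`; `early_stopping_epoch` is a hypothetical helper). Replaying the `val_loss` values of epochs 60 to 71 from the log reproduces the stop; every earlier epoch has a higher `val_loss`, so starting the replay at epoch 60 does not change the outcome.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=1e-3):
    """Return the 0-based index of the epoch at which training would stop,
    or None if the patience is never exhausted."""
    best = float('inf')
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:   # improved by more than min_delta
            best, wait = loss, 0
        else:
            wait += 1                 # one more epoch without improvement
            if wait >= patience:
                return epoch
    return None

# val_loss values for epochs 60-71, copied from the training log above
tail = [5.8467, 5.6838, 5.6420, 5.6130, 5.6686, 5.4862,
        5.4395, 5.4524, 5.4296, 5.5336, 5.4987, 6.0121]
stop = early_stopping_epoch(tail, patience=3, min_delta=1e-3)
print(60 + stop)   # 71
```

The best `val_loss` (5.4296) was reached at epoch 68; epochs 69, 70 and 71 did not beat it, which exhausted `patience=3`.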

from sklearn.metrics import mean_squared_error

# RMSE on the full (standardized) training set

pred=model_DNN.predict(X_train_Std)

mse= mean_squared_error(y_train, pred)

rmse= np.sqrt(mse)

print('RMSE of all training data: ',rmse)

RMSE of all training data: 0.9300293868519189
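scikit-learn's `mean_squared_error(y_true, y_pred)` accepts the (n, 1) array that `predict` returns for a single-output network. A hand-written NumPy RMSE must flatten the predictions first; otherwise broadcasting an (n, 1) array against an (n,) array silently produces an n × n matrix of differences. A minimal sketch, with `rmse` as a hypothetical helper:

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root-mean-squared error; both inputs are flattened first."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()   # (n, 1) -> (n,)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

y = np.array([1.0, 2.0, 3.0])           # targets, shape (3,)
pred = np.array([[1.0], [2.0], [5.0]])  # predict()-style output, shape (3, 1)

print(rmse(y, pred))                       # sqrt(4/3), about 1.1547
print(np.sqrt(np.mean((y - pred) ** 2)))   # 2.0: wrong, y - pred is a (3, 3) matrix
```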

def plot_NN(model,history,x_train,y_train):

    """Plot training loss versus validation loss, and the predicted
    versus expected output on the training data"""

    font = {'size' : 7.5}

    plt.rc('font', **font)

    # Create the figure only; the panels are added with plt.subplot below
    fig = plt.figure(figsize=(7, 5), dpi= 200, facecolor='w', edgecolor='k')

    # Left panel: learning curves recorded by model.fit
    ax1 = plt.subplot(2,2,1)

    history_dict = history.history

    loss_values = history_dict['loss']

    val_loss_values = history_dict['val_loss']

    epochs = range(1, len(loss_values) + 1)

    ax1.plot(epochs, loss_values, 'bo',markersize=4, label='Training loss')

    ax1.plot(epochs, val_loss_values, 'r-', label='Validation loss')

    plt.title('Training and validation loss',fontsize=11)

    plt.xlabel('Epochs (Early Stopping)',fontsize=9)

    plt.ylabel('Loss',fontsize=10)

    plt.legend(fontsize=8.5)

    plt.ylim((0, 100))

    # Right panel: predictions vs. targets, sorted by target value
    ax2 = plt.subplot(2,2,2)

    pred=model.predict(x_train)

    t = pd.DataFrame({'pred': pred.flatten(), 'y': y_train.flatten()})

    t.sort_values(by=['y'], inplace=True)

    ax2.plot(t['pred'].tolist(), 'g', label='Prediction')

    ax2.plot(t['y'].tolist(), 'm--o',markersize=2, label='Expected')

    plt.title('Prediction vs Expected for Training',fontsize=11)

    plt.xlabel('Data',fontsize=9)

    plt.ylabel('Output',fontsize=10)

    plt.legend(fontsize=8.5)

    fig.tight_layout(w_pad=1.42)

    plt.show()

plot_NN(model_DNN,history,Smaller_Training,Smaller_Training_Target)

# RMSE on the test set

X_test = test_set.drop("Heating Load", axis=1)

y_test = test_set["Heating Load"].values

# Standardize with the statistics learned from the training set (transform, not fit_transform)

X_test_Std=scaler.transform(X_test)

#

pred=model_DNN.predict(X_test_Std)

mse= mean_squared_error(y_test, pred)

rmse= np.sqrt(mse)

print('RMSE of Test: ',rmse)

RMSE of Test: 0.9441163085273847